Unveiling the Power of the Matrix Product: A Comprehensive Guide

In the realm of linear algebra, the matrix product stands as a fundamental operation, underpinning a vast array of applications across various scientific and engineering disciplines. From computer graphics and machine learning to physics and economics, understanding the mechanics and implications of the matrix product is crucial for anyone seeking to leverage the power of mathematical computation. This article aims to provide a comprehensive overview of the matrix product, exploring its definition, properties, computational aspects, and real-world applications. The goal is to demystify this essential operation and empower readers with the knowledge to effectively utilize it in their respective fields.

Defining the Matrix Product

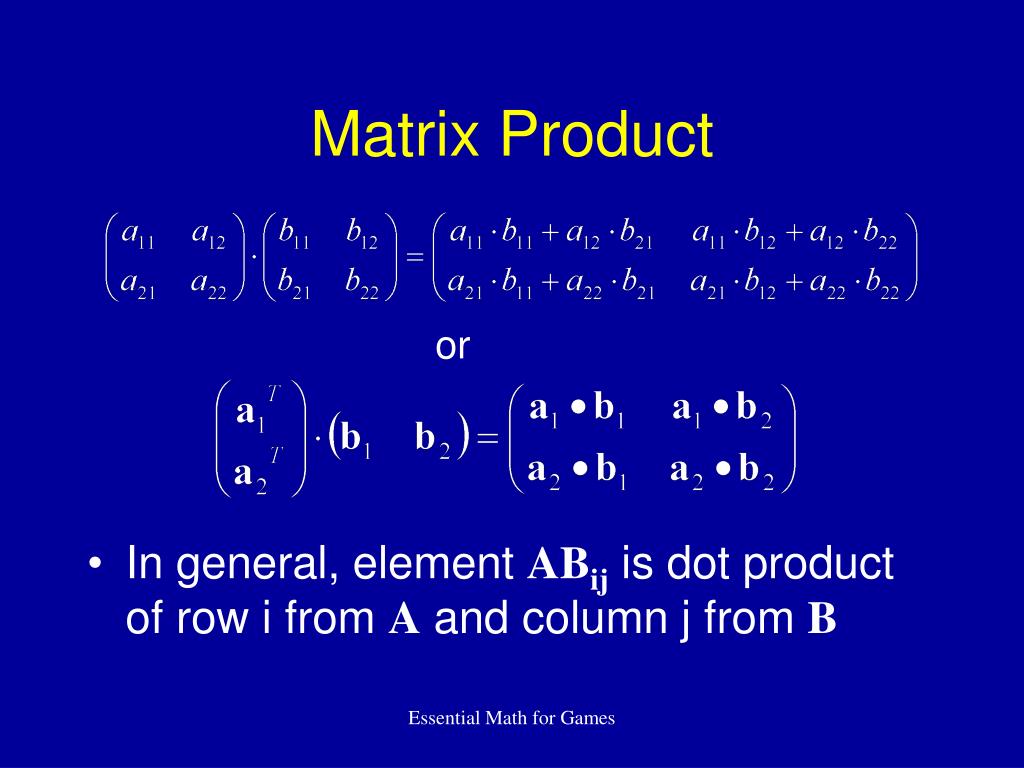

The matrix product, often referred to as matrix multiplication, is a binary operation that produces a matrix from two input matrices. Unlike element-wise operations, the matrix product involves a more complex process of combining rows from the first matrix with columns from the second. Specifically, if A is an m x n matrix and B is an n x p matrix, their matrix product, denoted as AB, is an m x p matrix. The element in the i-th row and j-th column of AB is calculated by taking the dot product of the i-th row of A and the j-th column of B.

Mathematically, the element $(AB)_{ij}$ is given by:

$$(AB)_{ij} = \sum_{k=1}^{n} A_{ik} B_{kj}$$

Where:

- $A_{ik}$ is the element in the i-th row and k-th column of matrix A.

- $B_{kj}$ is the element in the k-th row and j-th column of matrix B.

- $n$ is the number of columns in matrix A and the number of rows in matrix B.

It is crucial to note that the matrix product is only defined if the number of columns in the first matrix is equal to the number of rows in the second matrix. This condition ensures that the dot product operation can be performed. If this condition is not met, the matrix product is undefined.
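The definition above can be sketched in a few lines of plain Python. This is a minimal illustration, not an optimized implementation: matrices are assumed to be non-empty lists of equal-length rows, and the function name `matmul` is chosen here for the example.

```python
def matmul(a, b):
    """Multiply an m x n matrix by an n x p matrix (lists of rows)."""
    m, n = len(a), len(a[0])
    if len(b) != n:
        # Columns of A must equal rows of B, otherwise the product is undefined.
        raise ValueError(f"cannot multiply {m}x{n} by {len(b)}x{len(b[0])}")
    p = len(b[0])
    # Entry (i, j) is the dot product of row i of A and column j of B.
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(p)]
            for i in range(m)]


A = [[1, 2, 3],
     [4, 5, 6]]      # 2 x 3
B = [[7, 8],
     [9, 10],
     [11, 12]]       # 3 x 2

C = matmul(A, B)     # 2 x 2
print(C)             # [[58, 64], [139, 154]]
```

Calling `matmul(A, A)` raises a `ValueError`, because a 2 x 3 matrix cannot be multiplied by another 2 x 3 matrix.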

Properties of the Matrix Product

The matrix product possesses several important properties that distinguish it from scalar multiplication and other matrix operations. These properties are essential for understanding how the matrix product behaves and for manipulating matrix expressions effectively. Key properties include:

- Associativity: The matrix product is associative, meaning that for matrices A, B, and C, (AB)C = A(BC). This property allows for the grouping of matrix multiplications without affecting the result.

- Distributivity: The matrix product is distributive over matrix addition. This means that for matrices A, B, and C, A(B + C) = AB + AC and (A + B)C = AC + BC.

- Non-Commutativity: Unlike scalar multiplication, the matrix product is generally not commutative: AB ≠ BA in general. If A is m x n and B is n x p, the product BA is not even defined unless p = m, and when both products exist they may have different shapes. Even for square matrices of the same size, the two products usually differ, so the order of multiplication must be preserved when manipulating matrix expressions.

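- Identity Matrix: The identity matrix, denoted by I, is a square matrix with ones on the main diagonal and zeros elsewhere. For any square matrix A of the same size, AI = IA = A. More generally, for an m x n matrix A, $I_m A = A I_n = A$, where $I_k$ denotes the k x k identity. The identity matrix acts as the multiplicative identity in matrix algebra.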

- Transpose: The transpose of a matrix product is the matrix product of the transposes in reverse order. That is, $(AB)^T = B^T A^T$. This property is useful when dealing with transposed matrices and their products.

These properties are fundamental to many matrix operations and are essential for working with linear transformations and solving systems of linear equations.
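The properties listed above can be checked numerically on small examples. The sketch below uses plain Python with integer entries so that equality is exact. The helper names and the matrices are chosen for illustration, and a check on one example is of course not a proof.

```python
def matmul(a, b):
    # Row-by-column dot products; zip(*b) iterates over the columns of b.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def transpose(a):
    return [list(col) for col in zip(*a)]


A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
C = [[2, 0], [1, 3]]
I = [[1, 0], [0, 1]]

assoc = matmul(matmul(A, B), C) == matmul(A, matmul(B, C))
distrib = matmul(A, add(B, C)) == add(matmul(A, B), matmul(A, C))
commutes = matmul(A, B) == matmul(B, A)
identity = matmul(A, I) == A and matmul(I, A) == A
transpose_rule = transpose(matmul(A, B)) == matmul(transpose(B), transpose(A))

print(assoc, distrib, commutes, identity, transpose_rule)
# True True False True True
```

Here AB is [[2, 1], [4, 3]] while BA is [[3, 4], [1, 2]], which is why the commutativity check fails.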

Computational Aspects of the Matrix Product

Computing the matrix product can be computationally intensive, especially for large matrices. The standard algorithm has a time complexity of $O(n^3)$ for two n x n matrices, and more generally $O(mnp)$ for an m x n matrix multiplied by an n x p matrix. This cost arises from the three nested loops: one over the rows of the result, one over its columns, and one over the summation index.

However, various algorithms have been developed to improve the efficiency of matrix product, particularly for very large matrices. Some of these algorithms include:

- Strassen Algorithm: The Strassen algorithm reduces the time complexity to $O(n^{\log_2 7}) \approx O(n^{2.81})$ by dividing each matrix into four submatrices and recursively computing seven block products instead of the usual eight, at the cost of additional matrix additions and subtractions.

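- Coppersmith–Winograd Algorithm: The Coppersmith–Winograd algorithm and its variants offer even lower asymptotic complexity (roughly $O(n^{2.376})$ for the original algorithm). They are considerably more complex to implement, and their constant factors are so large that they are of theoretical interest rather than practical use at realistic matrix sizes.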

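- Parallel Computing: Parallel computing techniques can significantly speed up the matrix product by distributing the computations across multiple processors or cores. Because each entry of the result can be computed independently, the approach is particularly effective for large matrices. In favorable cases the speedup is close to linear in the number of processors, although memory bandwidth and communication costs limit it in practice.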

The choice of algorithm depends on the size of the matrices, the available computational resources, and the desired level of accuracy, since fast algorithms such as Strassen's have weaker numerical stability guarantees than the standard method. For most practical applications, optimized libraries provide highly efficient implementations: BLAS (Basic Linear Algebra Subprograms) includes the matrix product itself through its GEMM routines, and LAPACK (Linear Algebra PACKage) builds on BLAS for higher-level operations such as factorizations and linear solves.
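To make the recursive idea behind the Strassen algorithm concrete, here is a minimal sketch in plain Python. It assumes square matrices whose size is a power of two, uses integer entries so the result can be compared exactly with the standard method, and makes no attempt at performance. The function names and the `cutoff` parameter are choices made for this example.

```python
def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def sub(a, b):
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def naive(a, b):
    # Standard row-by-column algorithm, used as the recursion base case.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def split(m):
    # Split a 2h x 2h matrix into four h x h blocks.
    h = len(m) // 2
    return ([r[:h] for r in m[:h]], [r[h:] for r in m[:h]],
            [r[:h] for r in m[h:]], [r[h:] for r in m[h:]])

def strassen(a, b, cutoff=2):
    """Multiply two n x n matrices, n a power of two (assumption)."""
    if len(a) <= cutoff:
        return naive(a, b)
    a11, a12, a21, a22 = split(a)
    b11, b12, b21, b22 = split(b)
    # Seven recursive block products instead of eight.
    m1 = strassen(add(a11, a22), add(b11, b22), cutoff)
    m2 = strassen(add(a21, a22), b11, cutoff)
    m3 = strassen(a11, sub(b12, b22), cutoff)
    m4 = strassen(a22, sub(b21, b11), cutoff)
    m5 = strassen(add(a11, a12), b22, cutoff)
    m6 = strassen(sub(a21, a11), add(b11, b12), cutoff)
    m7 = strassen(sub(a12, a22), add(b21, b22), cutoff)
    # Recombine the products into the four blocks of the result.
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(add(sub(m1, m2), m3), m6)
    top = [left + right for left, right in zip(c11, c12)]
    bottom = [left + right for left, right in zip(c21, c22)]
    return top + bottom


A = [[4 * i + j for j in range(4)] for i in range(4)]
B = [[(i + 1) * (j + 2) % 5 - 2 for j in range(4)] for i in range(4)]
print(strassen(A, B) == naive(A, B))   # True
```

The `cutoff` reflects a common design choice: below some size the bookkeeping of the recursion costs more than it saves, so the recursion falls back to the standard algorithm.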

Applications of the Matrix Product

The matrix product finds extensive applications in a wide range of fields, including:

- Computer Graphics: In computer graphics, the matrix product is used for transformations such as rotation, scaling, and translation of objects in 2D and 3D space (translation is expressed as a matrix product by using homogeneous coordinates). Transformation matrices are multiplied together to create complex transformations, and these transformations are then applied to the vertices of the objects to render them on the screen.

- Machine Learning: The matrix product is a fundamental operation in many machine learning algorithms, including neural networks. Neural networks use matrices to represent weights and biases, and the matrix product is used to perform forward propagation and backpropagation.

- Physics: In physics, the matrix product is used to represent linear transformations, such as rotations and reflections, in quantum mechanics and classical mechanics. It is also used to solve systems of linear equations that arise in various physical problems.

- Economics: In economics, the matrix product is used to model economic systems and to analyze the relationships between different economic variables. For example, input-output models use matrices to represent the flow of goods and services between different industries.

- Network Analysis: The matrix product is used to analyze networks, such as social networks and transportation networks. For example, if A is the adjacency matrix of a network, the (i, j) entry of the k-th power of A gives the number of walks of length k from node i to node j.

- Image Processing: The matrix product is used in various image processing tasks, such as image filtering and image compression. For example, convolution operations, which are used to blur or sharpen images, are linear and can therefore be written as a matrix product, with the filter arranged into a structured (Toeplitz-like) matrix that multiplies the flattened image.
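As one concrete instance of the applications above, the network analysis case can be sketched in plain Python: powers of an adjacency matrix count walks between nodes. The four-node undirected graph below (edges 0-1, 0-2, 1-2, 2-3) and the helper names are made up for illustration.

```python
def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def matpow(a, k):
    # Repeated multiplication starting from the identity matrix.
    n = len(a)
    result = [[int(i == j) for j in range(n)] for i in range(n)]
    for _ in range(k):
        result = matmul(result, a)
    return result


# Adjacency matrix: entry (i, j) is 1 if nodes i and j share an edge.
A = [[0, 1, 1, 0],
     [1, 0, 1, 0],
     [1, 1, 0, 1],
     [0, 0, 1, 0]]

A2 = matpow(A, 2)
A3 = matpow(A, 3)

print(A2[0])      # [2, 1, 1, 1]  walks of length 2 starting at node 0
print(A3[0][3])   # 1             the single walk 0 -> 1 -> 2 -> 3
```

Note that walks may revisit nodes: the 2 in `A2[0][0]` counts the round trips 0 -> 1 -> 0 and 0 -> 2 -> 0.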

Examples of Matrix Product in Action

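To illustrate the practical application of the matrix product, consider a simple example in computer graphics. Suppose we want to rotate a point (x, y) by an angle θ counterclockwise around the origin. This can be achieved by multiplying the point’s coordinate vector by a rotation matrix:

$$R(\theta) = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix}$$

The resulting point (x', y') is given by:

$$\begin{pmatrix} x' \\ y' \end{pmatrix} = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} x\cos\theta - y\sin\theta \\ x\sin\theta + y\cos\theta \end{pmatrix}$$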

This example demonstrates how the matrix product can be used to perform geometric transformations in computer graphics. Similar techniques are used to perform scaling, translation, and other transformations.
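The rotation example can also be sketched in plain Python. The code assumes the standard 2D counterclockwise rotation matrix, with rows (cos θ, −sin θ) and (sin θ, cos θ), and the function names are chosen for this illustration. It also shows that composing two rotations by multiplying their matrices matches a single rotation by the summed angle, up to floating-point rounding.

```python
import math

def rotation(theta):
    """2 x 2 matrix for a counterclockwise rotation by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s],
            [s, c]]

def apply(m, v):
    # Matrix-vector product: one dot product per row of m.
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


# Rotating (1, 0) by 90 degrees gives approximately (0, 1).
x_new, y_new = apply(rotation(math.pi / 2), [1.0, 0.0])
print(round(x_new, 12), round(y_new, 12))

# Two successive rotations combine into one matrix via the matrix product.
combined = matmul(rotation(math.pi / 6), rotation(math.pi / 3))
expected = rotation(math.pi / 2)
```

Results are compared with a tolerance rather than exact equality because `math.cos(math.pi / 2)` is a very small nonzero number instead of exactly zero.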

Another example can be found in machine learning. Consider a simple neural network with one hidden layer. The output of the hidden layer is calculated by multiplying the input vector by a weight matrix and then applying an activation function. The output of the network is then calculated by multiplying the output of the hidden layer by another weight matrix and applying another activation function. The matrix product is a fundamental operation in both of these calculations.
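The forward pass described above can be sketched in plain Python. Everything specific here is an assumption made for illustration: the layer sizes, the weight values, the choice of ReLU and sigmoid as the two activation functions, the column-vector convention (weights multiply the input from the left), and the omission of bias terms.

```python
import math

def matvec(w, x):
    # Matrix-vector product: one dot product per row of the weight matrix.
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]

def relu(v):
    return [max(0.0, z) for z in v]

def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-z)) for z in v]


# Hypothetical weights: 2 inputs -> 3 hidden units -> 1 output.
W1 = [[0.5, -1.0],
      [0.25, 0.75],
      [-0.5, 0.5]]          # 3 x 2
W2 = [[1.0, 2.0, -1.0]]     # 1 x 3

x = [1.0, 2.0]

hidden = relu(matvec(W1, x))         # hidden layer activations
output = sigmoid(matvec(W2, hidden))  # network output

print(hidden)   # [0.0, 1.75, 0.5]
print(output)
```

With a batch of inputs stacked as the columns of a matrix, each `matvec` call becomes a full matrix product, which is how libraries typically process many inputs at once.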

Conclusion

The matrix product is a powerful and versatile operation that plays a crucial role in many scientific and engineering disciplines. Understanding its definition, properties, and computational aspects is essential for anyone seeking to leverage the power of mathematical computation. From computer graphics and machine learning to physics and economics, the matrix product provides a fundamental tool for modeling and solving complex problems. While computationally intensive for large matrices, various algorithms and optimized libraries are available to improve efficiency. As technology continues to advance, the matrix product will undoubtedly remain a cornerstone of scientific computing and a vital tool for innovation.